Written by Dr Swaminathan Krishnan, Senior Data Scientist, Illumination Works

AI adoption hinges on trustworthiness. Validation and testing help build trust, but how trustworthy is the AI, really? Uncertainty quantification helps answer this question: it establishes how much trust to place in an AI's outputs and allows those outputs to be integrated sensibly into rational decision making, resulting in well-founded and consistent outcomes.

Uncertainty is Domain Specific

Functional Domain vs AI Domain. The real-world problems encountered in the data science space may be viewed from the lens of functional domains (healthcare, finance, marketing, retail, supply chain logistics, etc.) or the lens of AI domains, the specialized areas or technologies in the AI space such as natural language processing (NLP), computer vision (CV), and time-series modeling, which can be used to solve data-driven problems in any functional domain.

Uncertainty is both functional-domain specific and AI-domain specific. However, much of the uncertainty in functional domains arises from factors such as data quality and availability, the complexity of the physical system (which can inflate aleatoric uncertainty), and the state of our knowledge of the underlying science. These factors are important, but they are largely beyond a data scientist's control. From a data science perspective, it is more useful to view uncertainty through the AI-domain lens and to understand its nuances in a few of these domains.

AI Domains. In this second installment of Illumination Works’ Uncertainty Estimation in AI thought leadership series, we focus on uncertainty in different AI domains. We give an overview of the nature of the uncertainty that arises in three of them: NLP, CV, and time-series modeling. Today, all three domains use machine learning (ML) extensively. NLP uses ML to get computers to understand, interpret, and communicate in human languages. CV uses ML to visualize, understand, interpret, and create images and videos. Time-series modeling uses ML and/or classical techniques to analyze chronologically ordered data, such as observations of phenomena or processes or measurements of physical quantities, in which future values inherently depend on past values. We wrap up the article by discussing uncertainty in multimodal applications and approaches for visualizing uncertainty.

Uncertainty in Natural Language Processing

Crux of the Issue. Inherent ambiguity in language (e.g., the fruit “apple” vs the company “Apple”), which may or may not be resolvable from context, is a form of aleatoric uncertainty in NLP [1]. The key challenge in NLP applications is equipping a computer to interpret human language by analyzing text and speech data and, possibly, to respond in real time. This involves training an algorithm by breaking the data into smaller parts and tagging these parts according to their grammatical role in context (such as noun, verb, adjective, or adverb) so that the computer can recognize meaningful relationships among them. This process becomes challenging in the presence of noise, hence the need to quantify the uncertainty associated with the computer’s interpretation of a given language segment.

Noise in NLP. Forms of noise in the data include out-of-vocabulary (OOV) words and distracting words or phrases. OOV words may be spelling mistakes or newly emerging words that were not present in the vocabulary used to train the NLP system. Distracting words or phrases are redundant: they can be removed from a sentence without changing the meaning the sentence conveys. Such words and phrases are common in informal texts and novels, where they enhance the reading experience [1], but they tend to lower the effectiveness of an NLP extractor and may therefore be treated as noise. Natural language is also context dependent. Different words or phrases can express the same idea, and the same word can mean different things depending on context. In machine translation, this leads to ambiguity and hence to uncertainty in the choices the translator makes. Mapping words to ideas, and vice versa, can thus be one-to-many or many-to-one, which is a source of uncertainty in NLP. Sarcasm is a good example: the phrase “Yeah right,” taken literally, indicates affirmation, whereas taken sarcastically it indicates negation, the diametrically opposite meaning.
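To make the OOV notion concrete, here is a minimal sketch that measures what share of an incoming text falls outside a training vocabulary. The tokenizer, the `oov_report` helper, and the toy vocabulary are all hypothetical illustrations, not part of any particular NLP system; production systems typically use subword tokenizers that soften, but do not eliminate, the OOV problem.

```python
import re

def tokenize(text: str) -> list[str]:
    # Deliberately simple tokenizer: lowercase, keep runs of letters and apostrophes
    return re.findall(r"[a-z']+", text.lower())

def oov_report(text: str, vocabulary: set[str]) -> tuple[float, list[str]]:
    """Return the share of tokens missing from `vocabulary` and the OOV tokens themselves."""
    tokens = tokenize(text)
    if not tokens:
        return 0.0, []
    oov = [tok for tok in tokens if tok not in vocabulary]
    return len(oov) / len(tokens), oov

# Hypothetical training vocabulary and an incoming query with two misspellings
training_vocab = {"the", "stock", "price", "of", "apple", "rose", "today"}
rate, oov_tokens = oov_report("The stcok price of Apple rose todday", training_vocab)
print(f"{rate:.2f}", oov_tokens)  # → 0.29 ['stcok', 'todday']
```

Note that lowercasing maps “Apple” onto “apple”, so the tokenizer itself discards a cue that context would otherwise have to recover; preprocessing choices like this one feed directly into the ambiguity described above.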

Notorious Accuracy vs Overfitting Tradeoff. NLP applications such as search engines also often encounter OOV queries that the application was not trained on and therefore may fail to yield reliable results. Deep neural network architectures can have a significant effect on uncertainty. Complex architectures can overfit the training data, tracking every nuance (including noise) in it, and then perform quite poorly on unseen data. Architectures, which are typically designed manually, must balance training accuracy against overfitting, a nontrivial task when there is limited control over the unseen data, which can differ vastly from the data used to train the model. Uncertainty can also arise when generating NLP output. For instance, as the number of categories increases (finer classification granularity), it becomes harder to distinguish between categories, which increases classification errors and model uncertainty. In addition, NLP model training employs sequence labeling to account for semantics (context): the label assigned to an element may depend on the labels assigned to neighboring elements in the sequence, so an error in labeling one element can spill over to its neighbors. Likewise, the generation process itself, which searches a vast space of candidate output sequences using models with hundreds of millions of parameters, can be error prone.
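One common way to quantify the effect of finer classification granularity is the entropy of the model's predicted class distribution. The sketch below uses made-up probability vectors (not outputs of any real model) to show how the same belief spread across more fine-grained categories registers as higher predictive uncertainty.

```python
import math

def predictive_entropy(probs: list[float]) -> float:
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def normalized_entropy(probs: list[float]) -> float:
    # Divide by log(K) so the value lies in [0, 1] regardless of the number of classes K
    k = len(probs)
    return predictive_entropy(probs) / math.log(k) if k > 1 else 0.0

# Hypothetical predictions for the same sentence at two granularities
coarse = [0.8, 0.2]              # positive vs negative
fine = [0.4, 0.4, 0.1, 0.1]      # very positive, positive, negative, very negative
print(round(predictive_entropy(coarse), 3), round(predictive_entropy(fine), 3))  # → 0.5 1.194
```

The normalized variant is useful when comparing models with different numbers of categories, since raw entropy grows with the number of classes even for an equally "unsure" model.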

The Solution. Uncertainty estimation methods may be calibration-confidence based, sampling based, or distribution based [1]. Calibration-confidence-based methods, which measure how well predicted probabilities agree with observed outcome frequencies, were discussed in Part 1 of this article. Sampling-based methods characterize uncertainty by drawing samples from the posterior distribution of a Bayesian model, extending ideas from the popular Markov chain Monte Carlo (MCMC) class of methods. Distribution-based methods assume a probability distribution for the random process (often the Gaussian, or normal, distribution) and use its mean, variance, and higher-order moments (if relevant) to characterize uncertainty.
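As a reminder of what a calibration-based measure looks like in practice, the sketch below computes the expected calibration error (ECE): predictions are grouped into confidence bins, and the gap between average confidence and observed accuracy in each bin is averaged, weighted by bin size. The equal-width binning and the bin count of 10 are common but arbitrary choices assumed here.

```python
import numpy as np

def expected_calibration_error(confidences, predictions, labels, n_bins=10):
    """Expected calibration error (ECE) with equal-width confidence bins.

    confidences: probability the model assigned to its predicted class, in [0, 1]
    predictions: predicted class per example
    labels:      true class per example
    """
    confidences = np.asarray(confidences, dtype=float)
    correct = (np.asarray(predictions) == np.asarray(labels)).astype(float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    ece = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        # Right-closed bins (lo, hi]; the first bin also catches confidence exactly 0
        in_bin = (confidences > lo) & (confidences <= hi)
        if lo == 0.0:
            in_bin |= confidences == 0.0
        if in_bin.any():
            gap = abs(correct[in_bin].mean() - confidences[in_bin].mean())
            ece += in_bin.mean() * gap  # weight by the fraction of examples in this bin
    return ece

# Hypothetical overconfident classifier: always ~95% sure, right only half the time
conf = [0.95] * 10
pred = [1] * 10
true = [1] * 5 + [0] * 5
print(round(expected_calibration_error(conf, pred, true), 2))  # → 0.45
```

An ECE near zero means stated confidences can be read at face value; a large ECE, as in this toy case, signals that the raw probabilities overstate how often the model is right.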

Uncertainty in Computer Vision

Crux of the Issue. In computer vision applications, two primary questions of interest are: what objects are present in an image, and where in the image or frame are they located? These questions are usually tackled through image segmentation (the creation of segmentation masks), in which each pixel of the image is assigned a semantic class label, often visualized as a color. A segmentation mask is simply a portion of the image that has been differentiated from the other regions of the image. Segmentation is achieved through deep learning (neural) networks composed of layers of interconnected nodes (neurons). Each node acts as a switch or gateway that modifies the information passing through it, so that by the time the information exits the network, the input data have been sliced and diced into a set of output classes. The modification at each stage is achieved through weighted sums of inputs passed through nonlinear mathematical functions.
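The last step of a typical segmentation network can be sketched as follows: the network emits one score per class for every pixel, a softmax turns those scores into probabilities, and the most probable class becomes the pixel's label in the mask. The 2x2 "image" and its scores below are hypothetical, and real networks would produce the logits rather than having them typed in.

```python
import numpy as np

def softmax(logits, axis=-1):
    # Subtract the max for numerical stability before exponentiating
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def segmentation_mask(logits):
    """Turn per-pixel class scores of shape (H, W, K) into a label mask of shape (H, W)."""
    probs = softmax(logits, axis=-1)
    mask = probs.argmax(axis=-1)       # most probable class per pixel
    confidence = probs.max(axis=-1)    # its probability: a crude per-pixel confidence
    return mask, confidence

# Hypothetical 2x2 image with 3 classes (e.g. background, road, vehicle)
logits = np.array([[[4.0, 1.0, 0.0], [0.0, 3.0, 0.0]],
                   [[0.0, 0.0, 2.0], [1.0, 1.0, 1.0]]])
mask, confidence = segmentation_mask(logits)
print(mask)
```

The bottom-right pixel has identical scores for all three classes, so its label is an arbitrary tie-break and its confidence is only 1/3. Softmax confidences like these are not guaranteed to be calibrated, which is part of the motivation for the Bayesian treatment discussed next.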

The Solution. Uncertainty can be modeled by replacing the deterministic network’s weight parameters with probability distributions over those parameters. Instead of optimizing a single set of network weights, the model averages its predictions over all possible weights (weighted by their posterior probability), and the variance of the resulting predictions says something about the uncertainty in the model's output. This Bayesian approach is among the most effective methods for quantifying uncertainty in CV applications. Classical Bayesian models have historically treated all uncertainty as epistemic, expressed through probability distributions on the parameters of the ML model. More recently, researchers have tracked aleatoric uncertainty separately from epistemic uncertainty in CV applications by modeling the probability distribution of the error in the model’s predicted output [2].
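For regression tasks, Kendall and Gal [2] approximate the predictive variance from T stochastic forward passes, each returning a predicted mean ŷ_t and a predicted noise variance σ̂_t²: Var(y) ≈ (1/T) Σ ŷ_t² − ((1/T) Σ ŷ_t)² + (1/T) Σ σ̂_t². The first two terms (the spread of the means) are epistemic; the last term (the average predicted noise) is aleatoric. The sketch below implements only that bookkeeping and assumes the per-pass outputs already exist; how they are produced (MC dropout in [2]) is outside its scope, and the numbers are hypothetical.

```python
import numpy as np

def decompose_uncertainty(pred_means, pred_vars):
    """Split predictive variance into epistemic and aleatoric parts.

    pred_means, pred_vars: arrays of shape (T, ...) holding the mean and noise variance
    predicted by each of T stochastic forward passes (e.g. MC dropout samples).
    """
    pred_means = np.asarray(pred_means, dtype=float)
    pred_vars = np.asarray(pred_vars, dtype=float)
    mean = pred_means.mean(axis=0)
    epistemic = pred_means.var(axis=0)   # mean(y^2) - mean(y)^2: disagreement across passes
    aleatoric = pred_vars.mean(axis=0)   # average noise variance the network predicts
    return mean, epistemic, aleatoric, epistemic + aleatoric

# Hypothetical outputs of T = 4 passes for 3 pixels (e.g. per-pixel depth regression)
means = [[2.0, 5.0, 1.0], [2.1, 3.0, 1.0], [1.9, 7.0, 1.0], [2.0, 5.0, 1.0]]
variances = [[0.1, 0.2, 2.0]] * 4
mean, epistemic, aleatoric, total = decompose_uncertainty(means, variances)
```

Here the second pixel is dominated by epistemic uncertainty (the passes disagree, so more data could help), while the third is dominated by aleatoric uncertainty (the passes agree, but the data are inherently noisy).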

Uncertainty in Time-Series Modeling

Crux of the Issue. Time-series modeling is another application where uncertainty quantification is crucial. A time series is a data series that tracks a measure over time, such as the spot price of a company’s stock, the temperature at a given location, product inventories, or product sales. For such measures, future values typically depend on past values. Forecasting these quantities holds immense value for decision making in these business domains. It is equally important to characterize the uncertainty associated with the forecasts, because this allows decision makers to weigh the upside and downside risks of decisions based on those forecasts.

The Solution. Classical time-series forecasting methods include autoregressive (AR) models, in which a future value is a linear combination (regression) of values at specific lags in the past, and their variants (ARIMA, SARIMA, etc.). In these methods, uncertainty is captured by assuming normally distributed errors (and, in some treatments, normally distributed regression coefficients) and using the resulting forecast-error variance to develop upper- and lower-bound forecasts at various levels of confidence. A decision maker may treat the interval between the upper and lower bounds as the range of plausible values for the forecast at the desired degree of confidence.
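The sketch below shows the idea for the simplest case, an AR(1) model x_t = c + φ x_{t−1} + e_t with Gaussian errors e_t of standard deviation σ. Its h-step forecast-error variance is σ²(1 + φ² + … + φ^(2(h−1))), so the bounds widen with the horizon. It is a minimal NumPy illustration on a simulated series; it ignores uncertainty in the estimated coefficients, a common textbook simplification, and the helper names are hypothetical. Libraries such as statsmodels provide full ARIMA implementations with interval estimates.

```python
import numpy as np

def fit_ar1(x):
    """Least-squares fit of x_t = c + phi * x_{t-1} + e_t; returns (c, phi, sigma)."""
    x = np.asarray(x, dtype=float)
    X = np.column_stack([np.ones(len(x) - 1), x[:-1]])
    coef, *_ = np.linalg.lstsq(X, x[1:], rcond=None)
    residuals = x[1:] - X @ coef
    sigma = residuals.std(ddof=2)  # two estimated parameters (c and phi)
    return coef[0], coef[1], sigma

def ar1_forecast(x, horizon, z=1.96):
    """h-step point forecasts with Gaussian bounds (z = 1.96 gives roughly 95%)."""
    c, phi, sigma = fit_ar1(x)
    last = float(np.asarray(x)[-1])
    forecasts, lower, upper = [], [], []
    var = 0.0
    for h in range(1, horizon + 1):
        last = c + phi * last
        # Forecast-error variance accumulates sigma^2 * phi^(2(h-1)) at each step
        var += sigma**2 * phi ** (2 * (h - 1))
        half_width = z * np.sqrt(var)
        forecasts.append(last)
        lower.append(last - half_width)
        upper.append(last + half_width)
    return np.array(forecasts), np.array(lower), np.array(upper)

# Simulated AR(1) series with c = 2, phi = 0.8, sigma = 1
rng = np.random.default_rng(0)
series = [10.0]
for _ in range(299):
    series.append(2.0 + 0.8 * series[-1] + rng.normal(scale=1.0))
forecast, lower, upper = ar1_forecast(series, horizon=5)
```

Because each additional step adds a positive variance term, the interval for step 5 is wider than for step 1, which is the usual fan-shaped forecast band.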

Even when state-of-the-art deep learning methods, such as long short-term memory (LSTM) networks, are used for time-series forecasting, the uncertainty associated with the forecast values is characterized by upper- and lower-bound values. However, the approach taken to arrive at these bounds is distinctly different, because these methods rely on training, validation, and test datasets prepared by stacking clipped windows of past values, each shifted along time by a specific stride. Assuming that the forecast errors on the test dataset are normally distributed, the resulting error distribution may be used to develop upper and lower bounds for the forecasts.
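The windowing and error-based bounds can be sketched as below. To stay self-contained, the sketch swaps in a plain linear model on each window as a stand-in for an LSTM and uses a simple chronological train/test split (a validation set, normally used for early stopping or tuning, is omitted for brevity). The series is simulated, and `make_windows` and `residual_bounds` are hypothetical helper names.

```python
import numpy as np

def make_windows(series, window, horizon=1, stride=1):
    """Stack clipped windows of past values (inputs) and the value `horizon` steps later (target)."""
    series = np.asarray(series, dtype=float)
    X, y = [], []
    for start in range(0, len(series) - window - horizon + 1, stride):
        X.append(series[start:start + window])
        y.append(series[start + window + horizon - 1])
    return np.array(X), np.array(y)

def residual_bounds(y_true, y_pred, z=1.96):
    """Mean and half-width of a Gaussian interval fitted to held-out forecast errors."""
    errors = np.asarray(y_true) - np.asarray(y_pred)
    return errors.mean(), z * errors.std(ddof=1)

rng = np.random.default_rng(1)
t = np.arange(400)
series = np.sin(2 * np.pi * t / 50) + rng.normal(scale=0.1, size=t.size)

X, y = make_windows(series, window=20, horizon=1, stride=1)
split = int(0.7 * len(X))  # chronological split: no shuffling across time
X_train, y_train, X_test, y_test = X[:split], y[:split], X[split:], y[split:]

# Stand-in for an LSTM: a linear model on the window plus an intercept, fitted by least squares
w, *_ = np.linalg.lstsq(np.column_stack([X_train, np.ones(len(X_train))]), y_train, rcond=None)

def predict(windows):
    return np.column_stack([windows, np.ones(len(windows))]) @ w

bias, half_width = residual_bounds(y_test, predict(X_test))
point = predict(series[-20:][None, :])[0]  # next-step forecast from the latest window
lower, upper = point + bias - half_width, point + bias + half_width
```

Swapping the linear stand-in for a trained LSTM changes only `predict`; the windowing and the test-error interval stay the same, which is what distinguishes this approach from the model-based intervals of classical AR methods.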

Uncertainty in Multimodal Applications

Crux of the Issue. In several applications, we need to lean on a combination of AI domains to build our solution. These are known as multimodal AI applications. Consider the application of information extraction from drawings. For this application, NLP and CV may be leveraged in tandem, with CV used to extract contents from the drawings and NLP used to process those contents to extract information. Another use case for multimodal engagement is tracking shifting narratives and/or sentiments in social media conversations. Here NLP may be used to extract content from social media, and time-series modeling may be used to track changes in sentiment over time. A similar application leveraging CV and time-series modeling can be conceived for extracting content from streaming video and tracking that information over time so that action can be taken. How do we combine the uncertainty from each stage or mode in such applications?

The Solution. Careful propagation of uncertainty from one stage to the next is the answer, but this is easier said than done. As uncertainty is propagated from one mode to the next, it must be recast into the structure and form most suitable for the receiving mode or stage. One can conceive of multistage Bayesian neural networks that handle all of the modes. This is an active area of research, and we at Illumination Works stay abreast of these developments so that we can incorporate them into our solutions as they appear in the technical literature.
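One simple, general way to carry uncertainty across stages is Monte Carlo propagation: draw samples from the upstream stage's output distribution, push each sample through the downstream stage, and summarize the spread of the results. The sketch below applies this to the social media example above under hypothetical assumptions: stage 1 (NLP) gives each post a probability of being positive, and stage 2 (time series) tracks the daily share of positive posts. The probabilities are recast as sampled hard labels, which is the form the downstream stage consumes. This is a sampling technique, not the multistage Bayesian network mentioned above.

```python
import numpy as np

def propagate_sentiment(post_probs, post_days, n_days, n_samples=2000, seed=0):
    """Monte Carlo propagation of per-post sentiment uncertainty into a daily time series.

    post_probs: stage-1 (NLP) probability that each post is positive
    post_days:  integer day index of each post
    Returns an array of shape (3, n_days): 5th, 50th and 95th percentiles of the
    daily share of positive posts. Days without posts are reported as 0.
    """
    rng = np.random.default_rng(seed)
    post_probs = np.asarray(post_probs, dtype=float)
    post_days = np.asarray(post_days)
    counts = np.bincount(post_days, minlength=n_days)
    # Recast stage-1 probabilities as sampled hard labels, one draw per Monte Carlo run
    labels = rng.random((n_samples, post_probs.size)) < post_probs
    shares = np.empty((n_samples, n_days))
    for day in range(n_days):
        on_day = post_days == day
        shares[:, day] = labels[:, on_day].sum(axis=1) / max(counts[day], 1)
    return np.percentile(shares, [5, 50, 95], axis=0)

# Hypothetical stage-1 output: three confidently classified posts on day 0,
# two genuinely ambiguous posts on day 1
bands = propagate_sentiment([1.0, 1.0, 0.0, 0.5, 0.5], [0, 0, 0, 1, 1], n_days=2)
```

Day 0 ends up with a tight band, while the ambiguous posts on day 1 produce a band spanning the full 0 to 1 range: upstream uncertainty shows up directly as a wider interval in the downstream time series.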

Uncertainty Visualization

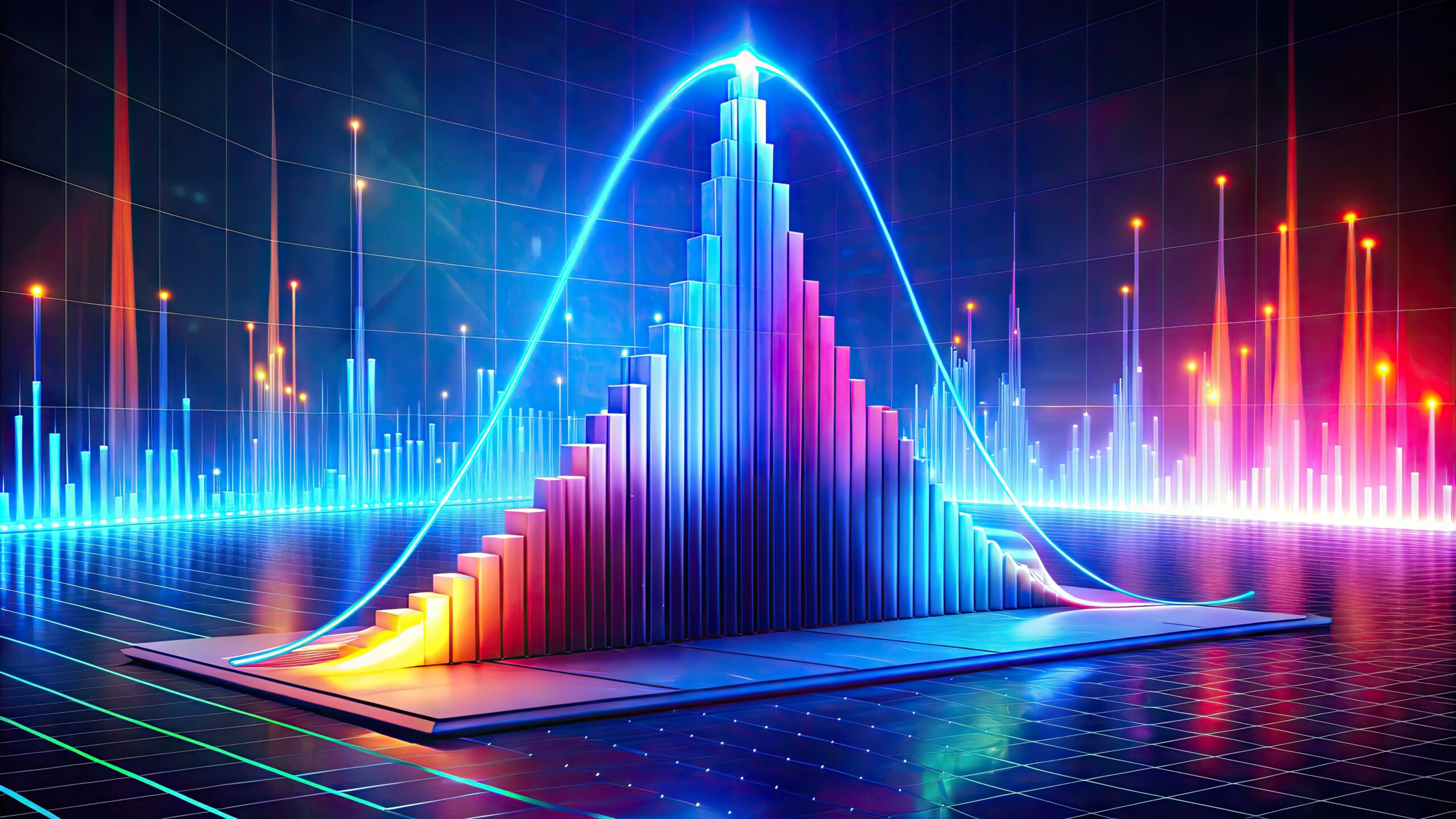

Making the Findings Accessible. Uncertainty, by its very nature, is a hard concept to wrap one’s head around. It is therefore crucial to present the complex numerical quantities representing uncertainty in a visually appealing and intuitive manner if end users are to adopt them and integrate them into their decision-making processes. Front-end dashboards are a convenient tool for this.

Dashboard or user interface (UI) development is a creative endeavor, and there is no one-size-fits-all design. Broadly speaking, a dashboard typically includes information layers that the user can turn on and off to enhance visual clarity, as well as sectional views of the data (in which all dimensions except two are held constant).

Displaying the underlying data as point clouds (scatter plots) or bar charts and overlaying model outputs (e.g., averages, forecasts) along with the corresponding uncertainties as error bars (or intervals or bounds) is usually quite effective in quantitative applications [3]. Bell curves showing the distribution of data and its spread about the mean is another effective way of communicating uncertainty. Peaked (narrow) bell curves and narrower error bars (or intervals or bounds) are indicative of greater certainty while broader/wider curves (flatter hills) are indicative of greater uncertainty.
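The quantities behind such error bars are straightforward to compute. The sketch below, using simulated data for two hypothetical groups, returns each group's mean and the half-width of an approximate 95% confidence interval (1.96 standard errors, a normal approximation), and shows why a narrower bell curve is visibly more peaked: the normal density's peak height is 1/(σ√(2π)). Plotting is left as a comment so the sketch runs without a plotting library.

```python
import numpy as np

def mean_with_error_bar(samples, z=1.96):
    """Return the sample mean and the half-width of an approximate 95% confidence interval."""
    samples = np.asarray(samples, dtype=float)
    sem = samples.std(ddof=1) / np.sqrt(samples.size)  # standard error of the mean
    return samples.mean(), z * sem

def normal_peak_height(sigma):
    # Peak of the normal density: a smaller sigma gives a narrower, taller bell curve
    return 1.0 / (sigma * np.sqrt(2.0 * np.pi))

# Simulated measurements: same true mean, very different spread
rng = np.random.default_rng(42)
groups = {"A": rng.normal(5.0, 0.5, size=50), "B": rng.normal(5.0, 2.0, size=50)}
means, half_widths = zip(*(mean_with_error_bar(s) for s in groups.values()))
# With matplotlib: ax.errorbar(range(len(means)), means, yerr=half_widths, fmt="o", capsize=4)
```

Group B's wider error bar and flatter bell curve both communicate the same message: its estimate deserves less confidence than group A's.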

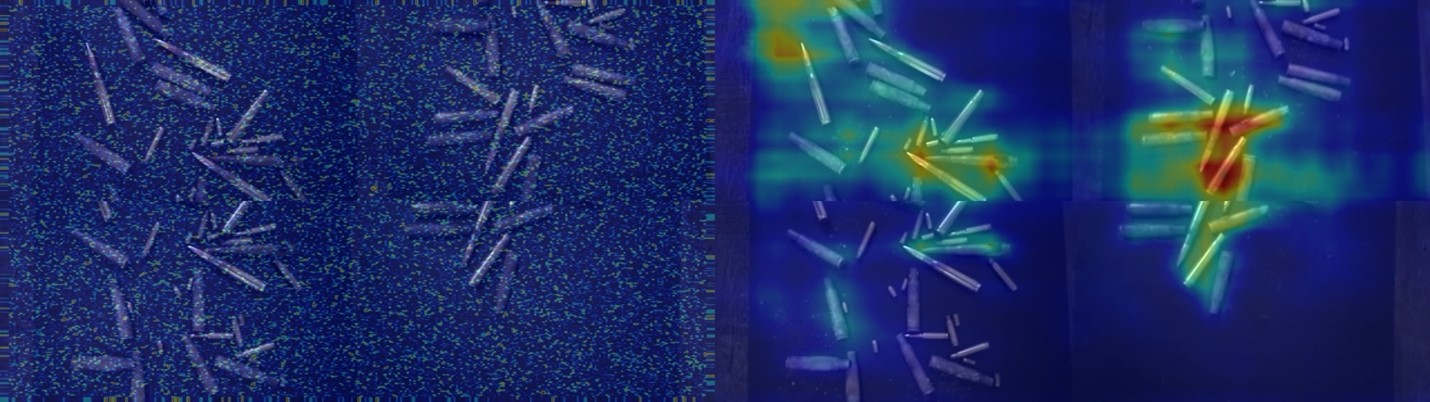

In NLP and CV applications, heatmaps or color ramps representing various levels of uncertainty can serve as effective surrogates for confidence ratings. When uncertainties along multiple dimensions must be displayed on the same screen (for example, pixel-value prediction uncertainty, object identification and segmentation uncertainty, and object location uncertainty in an image or video in CV applications), adequate consideration should be given to keeping them visually separated. The heatmap image accompanying this section comes from CV work Illumination Works performed leveraging our Theia™ Data Labeling and Curation solution.
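A per-pixel uncertainty heatmap of this kind can be derived from a segmentation model's class probabilities. The sketch below averages the probabilities over stochastic forward passes (if any are available), computes the per-pixel entropy, and scales it to [0, 1] so it can be fed to a color ramp. The input array and its shape convention are assumptions for illustration; any image-display routine could render the result.

```python
import numpy as np

def entropy_heatmap(prob_samples):
    """Per-pixel predictive entropy, scaled to [0, 1] for a color ramp.

    prob_samples: array of shape (T, H, W, K) with class probabilities from T stochastic
    forward passes (use T = 1 for a single deterministic prediction).
    """
    probs = np.asarray(prob_samples, dtype=float).mean(axis=0)  # average over passes
    k = probs.shape[-1]
    entropy = -(probs * np.log(np.clip(probs, 1e-12, 1.0))).sum(axis=-1)
    return entropy / np.log(k)  # 0 = fully confident, 1 = uniform over the K classes

# Hypothetical: T = 2 passes over a 1x2 image with 2 classes
samples = np.array([[[[0.99, 0.01], [0.9, 0.1]]],
                    [[[0.98, 0.02], [0.1, 0.9]]]])
heat = entropy_heatmap(samples)
# With matplotlib: plt.imshow(heat, cmap="magma", vmin=0, vmax=1); plt.colorbar()
```

In this toy case, the first pixel is confidently classified in both passes and stays dark on the ramp, while the passes disagree on the second pixel, so its averaged probabilities are uniform and it lights up at the top of the scale.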

Summary

Following on the heels of Part 1 of this article, where we introduced the notion of uncertainty and its relevance in data science, here in Part 2 we described the uncertainties that arise in three of the most common AI domains (NLP, CV, and time-series modeling/forecasting) and how they are handled in modeling.

Illumination Works has implemented many of the elements presented in this article in our solutions and will continue to train our clients on their effective use in practice. Illumination Works has a dedicated Data Science Practice comprising individuals trained in science, technology, engineering, and mathematics disciplines, who work collaboratively to solve our clients’ toughest data analytic challenges. Our Data Science team uses cutting-edge AI and ML techniques, advanced analytics founded in comprehensive statistical methodologies, and flexible data visualization to enable our clients to gain insights from their data, solve business problems, and increase the value of their data by uncovering the information needed for improved real-time decision making. Our Data Science team is at the forefront of delivering value-driven analytics and predictive capabilities with proven results, providing customers with accurate and meaningful data for insights and predictions that result in quick wins and long-term success.

About the Author

Swaminathan Krishnan, PhD, Senior Data Scientist.

Dr. Krishnan is a Senior Consultant at Illumination Works developing AI, ML, and data science applications for the U.S. Air Force and the Air Force Research Laboratory. He has a Ph.D. degree from the California Institute of Technology, an M.S. degree from Rice University, and a B.Tech. degree from the Indian Institute of Technology. Prior to joining Illumination Works, Dr. Krishnan was on the faculty of Manhattan University and Caltech, a Fulbright-Nehru Visiting Scholar/Professor at the Indian Institute of Technology Madras, and on the building engineering team at the international multi-disciplinary firm Arup. His current interests and expertise span the data science areas of time series forecasting, anomaly detection, NLP and large language models, and CV. Dr. Krishnan has published extensively in international engineering journals and was awarded Illumination Works’ Brightest Light Award in 2023.

Special thanks to the contributors and technical reviewers of this article:

- Janette Steets, PhD, Director of Data Science

- Srini Anand, Principal Data Scientist

- John Tribble, Principal Data Scientist

- Cathy Claude, Director of Marketing

If you liked this article, you might also like:

- Maximizing the full potential of AI with performance and scalability

- Revolutionizing the AI landscape with retrieval-augmented generation (RAG), knowledge graphs, and quantized large language models (LLM)

- Beyond Dashboards: The psychology of decision-driven business intelligence/business analytics (BI/BA)

Sources referenced in this article:

- [1] Uncertainty in Natural Language Processing: Sources, Quantification, and Applications. Mengting Hu, Zhen Zhang, Shiwan Zhao, Minlie Huang, and Bingzhe Wu. https://arxiv.org/pdf/2306.04459

- [2] What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision? Alex Kendall and Yarin Gal. 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA. https://arxiv.org/pdf/1703.04977

- [3] Fundamentals of Data Visualization. Claus O. Wilke. O’Reilly. https://clauswilke.com/dataviz/

- Image Credits: All images sourced from stock.adobe.com, with the exception of the computer vision heatmap image, which was produced leveraging ILW’s Theia™ Data Labeling and Curation solution.

About Illumination Works

Illumination Works is a trusted technology partner in user-centric digital transformation, delivering impactful business results to clients through a wide range of services, including big data information frameworks, data science, data visualization, and application/cloud development, all while focusing on the end-user perspective. Established in 2006, Illumination Works is headquartered in Beavercreek, Ohio, with physical operations in Ohio, Utah, and the National Capital Region. In 2020, Illumination Works adopted a hybrid work model; it currently has employees in 20+ states and is actively recruiting.