Organizations need a sophisticated data infrastructure strategy, with cutting-edge scalability and performance measures, to realize the full potential of their data universe by transforming raw data into actionable information.

Our Inherent Drive for Deeper Understanding

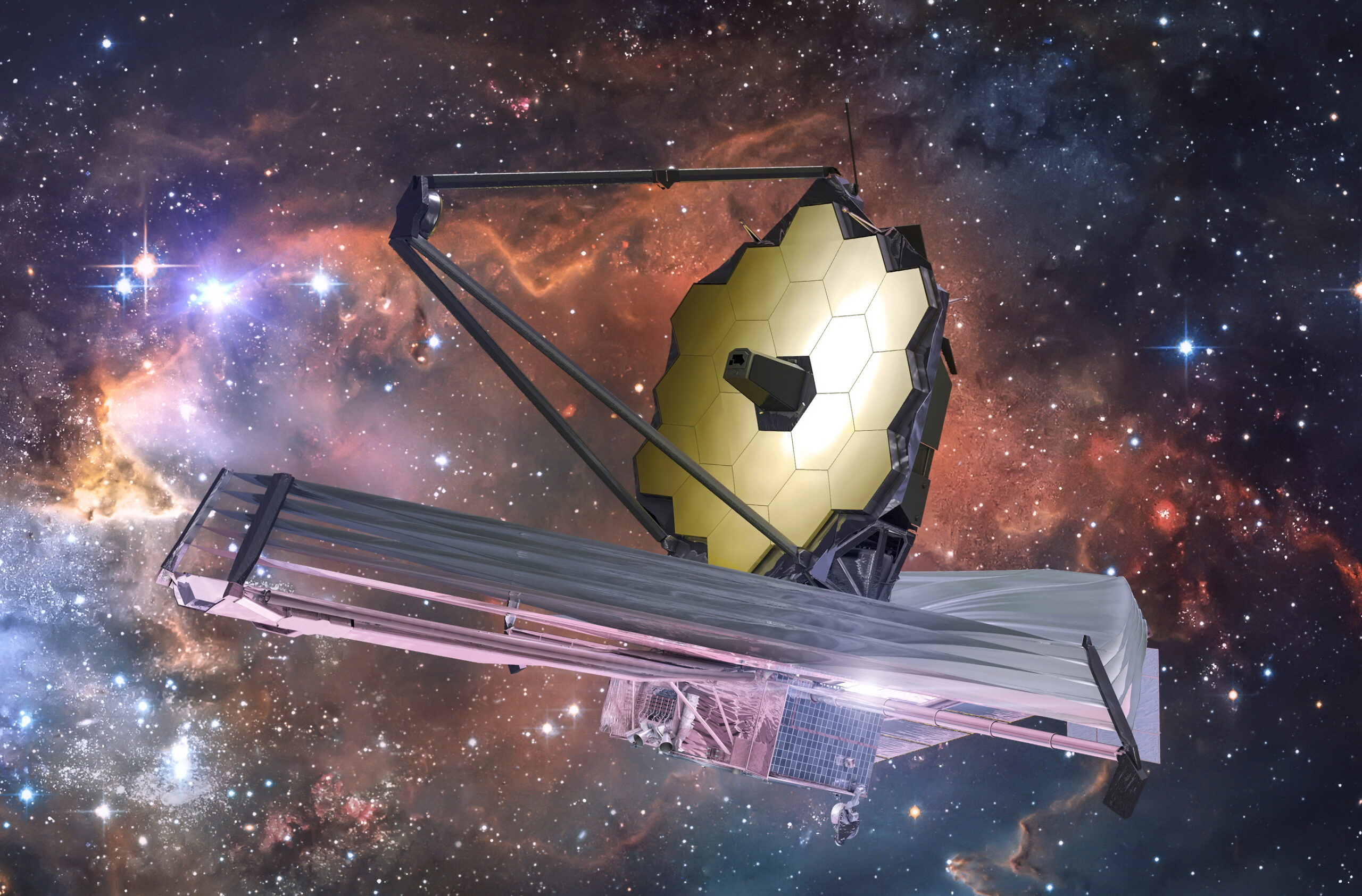

Curiosity is human nature. Imagine yourself somewhere off the grid, far from the hustle and bustle of the internet of things (IoT) that floods your daily life. You are in the middle of nowhere on a clear, crisp, dark evening. You look up at the never-ending night sky, full of countless stars. With the naked eye alone, we have no way of making sense of this vast universe. Seeking deeper insight, astronomers developed the telescope. Today, the highly sophisticated James Webb Space Telescope, launched in 2021, offers astronomers an even more profound view of the universe, and this desire to advance our understanding will continue to evolve.

Similarly, the endless volume and complexity of large data sets require organizations to sharpen their lens with a solid data infrastructure strategy. With the right strategies in place, organizations can realize the full potential of their data: opening a window onto new possibilities, illuminating insights into what is still unknown, and enabling better decision making and business advantage.

Synergizing Teams & Tech for Data Insights

Understanding versus information. Since the dawn of smartphones, and with each new device that joins the IoT, the volume and complexity of data have exploded. In his book Blink, Malcolm Gladwell writes, “We live in a world saturated with information…but what I have sensed is an enormous frustration with unexpected costs of knowing too much, of being inundated with information. We have come to confuse information with understanding.”

This sentiment rings painfully true: organizations find themselves surrounded by data with no straightforward place to begin understanding its core value. This new “gold” takes many forms, hidden deep in data architectures, in the mysterious “cloud,” and across hundreds of databases, all waiting to be mined for knowledge, insight, and business advantage.

Bringing together the star data team. The right team combined with the right data infrastructure strategy is a game-changing enabler to successfully transform large complex data sets into actionable insights. The working dynamic of data teams has evolved with data engineering and data science workflows becoming more intertwined.

Data scientists transform data into value by leveraging AI, ML, and complex statistical modeling. They rely heavily on data engineers to provide the full breadth and depth of available data in an easily consumable, flexible, scalable, and performant form. The data architect is the team’s quarterback, coordinating these intricate, integrated workflows with an eye on system scalability and performance, while software engineers provide the mortar that holds everything together by building and maintaining fully functional applications.

Key data infrastructure strategies. The noise created by the sheer quantity of data makes it vital to an organization’s survival to have key data infrastructure strategies in place to manage the unprecedented influx. Applied together, the following strategies give organizations a deeper, clearer understanding of their data assets by extracting the core value from their data and enabling the full potential of AI.

Data infrastructure strategies enable data professionals to:

- Wrangle and process near-unlimited amounts of large, complex data in a more streamlined, automated, and reliable manner

- Sift through and make sense of this vast amount of data

- Achieve transparency and ethical use of their data through data provenance

- Build AI/ML solutions in a scalable and performant manner

- Ultimately enable the full potential of AI/ML solutions

Deep Dive: ML & Data Infrastructure Strategies for Performance & Scale

When implemented with a strategic mindset, DataOps architectures and frameworks can deliver 10X or greater gains in processing speed and scalability

Key DataOps Capabilities

- Data quality assurance

- Data governance

- Data security

- Testing frameworks

- Error handling & debugging
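Several of these capabilities (data quality assurance, testing, and error handling) often meet in a single validation step. The Python sketch below is one minimal way to express it, assuming records arrive as dictionaries; the rule names, fields, and the 95% pass-rate threshold are hypothetical placeholders, not part of any specific DataOps product. Failing records are quarantined with the reasons they failed rather than silently dropped, and a rule that crashes is logged instead of halting the run.

```python
from dataclasses import dataclass, field
import logging
from typing import Any, Callable

log = logging.getLogger("data_quality")

Record = dict[str, Any]
Rule = Callable[[Record], bool]

# Hypothetical rules for an illustrative transactions feed; real rules come from your data contracts.
RULES: dict[str, Rule] = {
    "id_present": lambda r: r.get("id") is not None,
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    "country_is_iso2": lambda r: isinstance(r.get("country"), str) and len(r["country"]) == 2,
}


@dataclass
class QualityReport:
    passed: list[Record] = field(default_factory=list)
    quarantined: list[tuple[Record, list[str]]] = field(default_factory=list)

    @property
    def pass_rate(self) -> float:
        total = len(self.passed) + len(self.quarantined)
        return len(self.passed) / total if total else 1.0


def validate(records: list[Record], rules: dict[str, Rule] = RULES) -> QualityReport:
    """Check every record against every rule; failing records are quarantined, not dropped."""
    report = QualityReport()
    for record in records:
        failures = []
        for name, check in rules.items():
            try:
                ok = check(record)
            except Exception:  # a buggy rule is logged and counted as a failure instead of crashing the run
                log.exception("rule %s raised on record %r", name, record)
                ok = False
            if not ok:
                failures.append(name)
        if failures:
            report.quarantined.append((record, failures))
        else:
            report.passed.append(record)
    return report


def enforce(report: QualityReport, min_pass_rate: float = 0.95) -> None:
    """Block the batch from flowing downstream if too many records fail."""
    if report.pass_rate < min_pass_rate:
        raise ValueError(f"pass rate {report.pass_rate:.1%} is below {min_pass_rate:.1%}; batch blocked")
```

Because `validate` and `enforce` are plain functions, they can be exercised directly by a testing framework in CI before the same checks run against production batches.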

⇒ 1. DataOps Frameworks & Architecture

The DataOps framework and architecture is a structured approach to designing and implementing AI/ML solutions that process, store, and analyze large, complex datasets.

Distributed cloud compute ecosystems. These ecosystems allow data scientists and data engineers to load near-unlimited quantities of data into memory (rather than working from disk) and perform analyses in parallel across clusters hosted on a cloud compute platform.
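The pattern behind parallel analysis on a cluster is usually split-apply-combine: each node summarizes its own partition, and the small summaries are merged at the end. The Python sketch below illustrates that idea on a single machine, with local worker processes standing in for cluster nodes; it is an assumption-laden illustration, not how any particular cloud platform is configured. It computes count, mean, and population variance using a pairwise merge (the Chan et al. parallel update), which stays exact no matter how the data is partitioned.

```python
"""Map-reduce statistics over data partitions: a local stand-in for a compute cluster."""
import functools
from concurrent.futures import Executor, ProcessPoolExecutor, ThreadPoolExecutor
from typing import NamedTuple


class Partial(NamedTuple):
    n: int        # number of values in the partition
    mean: float   # partition mean
    m2: float     # sum of squared deviations from the partition mean


def summarize(partition: list[float]) -> Partial:
    """Map step: each worker summarizes only its own partition."""
    n = len(partition)
    if n == 0:
        return Partial(0, 0.0, 0.0)
    mean = sum(partition) / n
    return Partial(n, mean, sum((x - mean) ** 2 for x in partition))


def merge(a: Partial, b: Partial) -> Partial:
    """Reduce step: combine two summaries exactly (Chan et al. pairwise update)."""
    if a.n == 0:
        return b
    if b.n == 0:
        return a
    n = a.n + b.n
    delta = b.mean - a.mean
    return Partial(n, a.mean + delta * b.n / n, a.m2 + b.m2 + delta ** 2 * a.n * b.n / n)


def parallel_stats(partitions: list[list[float]],
                   executor_cls: type[Executor] = ProcessPoolExecutor,
                   workers: int = 4) -> tuple[int, float, float]:
    """Return (count, mean, population variance), summarizing partitions in parallel.

    Processes give true CPU parallelism in CPython; ThreadPoolExecutor can be passed
    instead for quick local tests.
    """
    with executor_cls(max_workers=workers) as pool:
        partials = list(pool.map(summarize, partitions))
    total = functools.reduce(merge, partials, Partial(0, 0.0, 0.0))
    if total.n == 0:
        raise ValueError("no data in any partition")
    return total.n, total.mean, total.m2 / total.n
```

Only the three-number summaries travel back to the coordinating process, which is why this shape scales: on a real cluster the partitions would stay on their nodes. When using the default `ProcessPoolExecutor`, call `parallel_stats` from under an `if __name__ == "__main__":` guard on platforms that spawn worker processes.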

Data analytics and ML cloud platform tools. These tools provide integrated environments in which data scientists, data engineers, and analysts can collaborate effectively on big data and ML applications.

⇒ 2. ML Model Development Optimization

With robust DataOps strategies in place, your organization is ready to exploit data and glean insights through ML. Implementing model development optimization techniques can substantially improve model performance and scalability.

Parallel processing & distributed compute. Working with the appropriate big data tools is crucial for optimizing the scalability and performance of AI/ML solutions as these tools allow for parallel processing and distributed compute.

Algorithm/model selection considerations. Evaluate candidate models with accuracy metrics, and weigh each model’s run-time and cost when fit on large, complex datasets. For example, a more computationally expensive model (e.g., a deep neural network or XGBoost) may outperform a simpler, more efficient model (e.g., Naïve Bayes) on metrics such as accuracy, precision, and recall. However, the more expensive model may cost more to implement in money, time, or both, so weighing these trade-offs against the organization’s priorities is an important step.
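One way to make this trade-off explicit is to score every candidate on the same held-out metrics and then pick the best one that fits a time and cost budget. The Python sketch below assumes binary labels and that fit time and cost have already been measured for each candidate; the `Candidate` fields, the budget parameters, and the candidate names and numbers in the usage note are hypothetical, chosen only to illustrate the logic.

```python
from dataclasses import dataclass


def classification_metrics(y_true: list[int], y_pred: list[int], positive: int = 1) -> dict[str, float]:
    """Accuracy, precision, and recall for a binary classifier."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return {
        "accuracy": sum(t == p for t, p in pairs) / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


@dataclass
class Candidate:
    name: str
    metrics: dict[str, float]  # e.g. output of classification_metrics on a held-out set
    fit_seconds: float         # measured training time on the full dataset
    cost_usd: float            # estimated compute cost per training run


def choose_model(candidates: list[Candidate], metric: str = "recall",
                 max_fit_seconds: float = float("inf"),
                 max_cost_usd: float = float("inf")) -> Candidate:
    """Best candidate on `metric` among those that fit the time and cost budget."""
    affordable = [c for c in candidates
                  if c.fit_seconds <= max_fit_seconds and c.cost_usd <= max_cost_usd]
    if not affordable:
        raise ValueError("no candidate fits the budget; relax the budget or try cheaper models")
    return max(affordable, key=lambda c: c.metrics[metric])
```

For instance, with a hypothetical Naïve Bayes candidate at recall 0.80 (12 s to fit) and a hypothetical boosted-tree candidate at recall 0.88 (900 s to fit), `choose_model(..., max_fit_seconds=600)` returns the Naïve Bayes model, while an unconstrained call returns the boosted trees. Which metric to maximize and how tight the budget should be are business decisions, not properties of the code.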

Data preprocessing & cleansing. Reduce data dimensionality, for example by removing redundant or uninformative features, to improve computational speed and help prevent overfitting.

Feature engineering. Apply appropriate techniques, such as imputation, one-hot/label encoding, and dimensionality reduction (e.g., principal component analysis), to extract more meaningful information from the data.
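To make the first two techniques concrete, the Python sketch below shows median imputation, label encoding, and one-hot encoding on plain lists, using only the standard library. It is a minimal illustration under the assumption that missing values are represented as `None`; libraries such as pandas and scikit-learn provide production-grade equivalents. Label encoding implies an ordering between categories, so it tends to suit tree-based models better than linear ones.

```python
from statistics import median
from typing import Optional


def impute_median(values: list[Optional[float]]) -> list[float]:
    """Replace missing values (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = median(observed)
    return [fill if v is None else v for v in values]


def label_encode(values: list[str]) -> tuple[list[int], dict[str, int]]:
    """Map each category to an integer code (sorted order, so the mapping is reproducible)."""
    mapping = {cat: i for i, cat in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values], mapping


def one_hot(values: list[str], categories: Optional[list[str]] = None) -> dict[str, list[int]]:
    """One indicator column per category.

    Pass the categories seen during training at inference time, so unseen values
    become all zeros instead of silently creating new columns.
    """
    cats = categories if categories is not None else sorted(set(values))
    return {f"is_{c}": [int(v == c) for v in values] for c in cats}
```

The key design point is that the fill value and the category list are learned from training data and then reused unchanged at inference, so that the model always sees features with the same meaning.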

Model pruning. Reduce trained model size by removing parameters or features that contribute little, which can improve inference speed and memory use.
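Magnitude pruning is one common way to do this: the weights with the smallest absolute values are set to zero on the assumption that they contribute least to the output. The Python sketch below applies it to a flat list of weights purely for illustration; real frameworks prune tensors, usually fine-tune afterward to recover accuracy, and only realize speed or memory savings when the sparse weights are stored or executed in a sparsity-aware format.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the `sparsity` fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)  # number of weights to remove
    # Indices sorted by magnitude; sorted() is stable, so ties keep their original order.
    smallest = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in smallest else w for i, w in enumerate(weights)]
```

With `sparsity=0.5`, half of the weights (the two smallest in magnitude, in a four-weight example) become zero while the larger ones are untouched.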

Basic code optimization. Use profiling tools to identify performance bottlenecks and pinpoint where optimization will pay off.
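Python’s built-in `cProfile` and `pstats` modules are one readily available option. The sketch below profiles a deliberately slow, hypothetical de-duplication step (a list membership test inside a loop makes it quadratic) and returns the top entries of the profile report, sorted by cumulative time. The faster version shows the kind of fix a profile typically points to: swapping the data structure rather than micro-tuning the loop.

```python
import cProfile
import io
import pstats


def slow_dedupe(items: list) -> list:
    """Hypothetical bottleneck: `x not in seen` scans a list, so the loop is O(n^2)."""
    seen: list = []
    out: list = []
    for x in items:
        if x not in seen:
            seen.append(x)
            out.append(x)
    return out


def fast_dedupe(items: list) -> list:
    """Same result in O(n): dict keys give constant-time lookups and keep first-seen order."""
    return list(dict.fromkeys(items))


def profile(func, *args, top: int = 5):
    """Run func(*args) under cProfile; return its result and a text report of the top entries."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    buffer = io.StringIO()
    pstats.Stats(profiler, stream=buffer).sort_stats("cumulative").print_stats(top)
    return result, buffer.getvalue()
```

Profiling first, then optimizing only the functions that dominate cumulative time, keeps optimization effort focused where it actually changes run-time.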

ML model development aims to produce accurate, robust, and scalable models

An MLOps framework is vital for deploying and managing ML models at scale in a reliable and maintainable manner

Key MLOps Capabilities

- Version control

- Automation

- Model packaging & automated deployment via CI/CD integration

- Model tracking and management

- Model monitoring

- Software environment management

- Security

- Cost optimization

⇒ 3. MLOps Framework & AI/ML Support

The MLOps framework ensures streamlined and automated development, deployment, and maintenance of the ML workflow at scale and provides the necessary architecture to package and deploy models into production in a scalable, sustainable fashion.

Pipeline automation. Continuous integration and continuous deployment (CI/CD) practices support MLOps and are often used at this stage to automate the pipeline and serve the model in production. CI/CD also covers the ongoing maintenance and support of the entire AI/ML software solution, end to end, from data storage to the final business application.
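A common building block in such a pipeline is an automated quality gate: before a retrained model is deployed, a CI step compares its evaluation metrics with those of the model currently in production and fails the job on a regression. The Python sketch below assumes both sets of metrics were written to JSON files earlier in the pipeline; the file layout, metric names, and tolerance are hypothetical choices for illustration.

```python
"""Hypothetical CI quality gate: fail the pipeline if a candidate model regresses."""
import json


def gate(candidate: dict[str, float], production: dict[str, float],
         metrics: tuple[str, ...] = ("accuracy", "precision", "recall"),
         tolerance: float = 0.005) -> list[str]:
    """Return one message per metric where the candidate is worse than production by more than `tolerance`."""
    failures = []
    for m in metrics:
        if candidate[m] < production[m] - tolerance:
            failures.append(f"{m}: candidate {candidate[m]:.3f} < production {production[m]:.3f}")
    return failures


def main(argv: list[str]) -> int:
    """argv: [script, candidate_metrics.json, production_metrics.json] (file names are illustrative)."""
    with open(argv[1]) as f:
        candidate = json.load(f)
    with open(argv[2]) as f:
        production = json.load(f)
    failures = gate(candidate, production)
    for msg in failures:
        print("GATE FAILED:", msg)
    return 1 if failures else 0  # a non-zero exit code fails the CI job and blocks deployment
```

In a CI job, the script would typically end with `if __name__ == "__main__": sys.exit(main(sys.argv))`, so that most CI systems treat the non-zero exit status as a failed step and stop the deployment stage from running.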

Model performance monitoring. Monitoring AI/ML models in production is a critical component of MLOps because data patterns can change over time and degrade model performance. MLOps frameworks are essential for the ongoing maintenance and support of AI/ML systems, and of specific ML workflow components, after implementation.
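Changing data patterns can often be detected before labeled outcomes are available by comparing the distribution of a feature in production with its distribution in the training data. The Population Stability Index (PSI) is one widely used measure for this; the Python sketch below computes it with equal-width bins taken from the baseline (quantile bins are another common choice). The 0.25 alert threshold reflects a frequently cited rule of thumb rather than a universal standard, and the bin count and the floor for empty bins are assumptions.

```python
import math

PSI_ALERT = 0.25  # rule of thumb: < 0.1 stable, 0.1 to 0.25 moderate shift, > 0.25 major shift


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index of `actual` (production) against `expected` (training baseline)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(sample: list[float]) -> list[float]:
        counts = [0] * bins
        for x in sample:
            i = min(max(int((x - lo) / width), 0), bins - 1)  # out-of-range values go to the edge bins
            counts[i] += 1
        # Floor empty bins so the log term below stays finite.
        return [max(c / len(sample), 1e-4) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drifted(expected: list[float], actual: list[float], threshold: float = PSI_ALERT) -> bool:
    """True when the production distribution has shifted enough to warrant an alert."""
    return psi(expected, actual) > threshold
```

Identical distributions give a PSI of zero, and a production sample that has moved entirely into the upper half of the baseline range scores well above the alert threshold. Running a check like this per feature on a schedule is one way to surface feature drift early.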

Model alerts, rollback & retraining. Monitoring and alerting let teams continuously track the performance of each model version in production, which supports timely rollback and retraining. Beyond performance metrics such as accuracy, precision, and recall, this includes tracking anomalous responses, feature drift, and other issues. MLOps also supports retraining models, triggered either automatically or manually.
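The alert, rollback, and retrain loop can be sketched around a model registry that remembers which versions have been promoted to production. The Python sketch below uses a hypothetical in-memory `ModelRegistry` (real MLOps tooling persists this state) and assumes a live accuracy figure is available, which requires ground-truth labels to arrive after predictions are made. The 0.85 threshold and the `retrain` hook are illustrative placeholders.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ModelRegistry:
    """In-memory stand-in for a model registry; real MLOps tooling persists this state."""
    versions: dict[str, dict[str, float]] = field(default_factory=dict)  # version -> offline metrics
    history: list[str] = field(default_factory=list)  # promoted versions, oldest first

    def register(self, version: str, metrics: dict[str, float]) -> None:
        self.versions[version] = metrics

    def promote(self, version: str) -> None:
        if version not in self.versions:
            raise KeyError(f"unknown model version {version!r}")
        self.history.append(version)

    @property
    def production(self) -> Optional[str]:
        return self.history[-1] if self.history else None

    def rollback(self) -> str:
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.history[-1]


def check_and_act(registry: ModelRegistry, live_accuracy: float, min_accuracy: float = 0.85,
                  retrain: Optional[Callable[[], None]] = None) -> str:
    """Alert, roll back if possible, and optionally trigger retraining when live accuracy drops."""
    if live_accuracy >= min_accuracy:
        return "ok"
    current = registry.production
    print(f"ALERT: model {current} live accuracy {live_accuracy:.3f} < {min_accuracy:.3f}")
    action = "alerted"
    if len(registry.history) >= 2:
        action = f"rolled back {current} -> {registry.rollback()}"
    if retrain is not None:
        retrain()  # hypothetical hook, e.g. submit a retraining job on fresh labeled data
    return action
```

Keeping the promotion history, rather than a single "current model" pointer, is what makes rollback a one-step operation: the previous known-good version is always at hand while the retrained replacement goes back through the same quality gates.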

Enabling the Full Potential of AI

With sophisticated data infrastructure strategies in place, organizations can continuously improve their AI/ML solutions in a robust and reliable manner, with faster development and higher code quality.

Shining a light on new possibilities and deeper understanding. Without advanced telescope lenses, we would not know how vast and spectacular the universe truly is, or where to even begin understanding it. We would be lost in the noise of the stars: magnificent even to the naked eye, yes, but a limited view that offers no sense of how much more profound the universe truly is or of the amazing treasures that lie within.

Benefits of Advancing AI/ML Solutions

- Reduced time to develop, test, and deploy software changes for quicker feature delivery

- Automated testing and code reviews to improve code quality and reduce the introduction of bugs

- Automated deployment to ensure consistency across environments

- Quicker feedback loop to help developers catch and fix issues early in the development process

- Automation of repetitive tasks that include building, testing, and deployment to free up developer time to focus on coding and innovation

Much like the highly advanced James Webb Space Telescope, a sophisticated data infrastructure strategy with cutting-edge scalability and performance measures allows organizations to illuminate and realize the true value of their data in a more streamlined, automated, and reliable manner. Without it, that value would be lost in the volume, complexity, and noise of the sheer quantity of data. Given the vast amount of information now available, the ability to sift through the noise and extract meaning is highly valuable. Without these strategies, organizations would have very limited ways to reap value from their data and no sense of its full AI/ML potential.

About Erica

Erica Swanson, Senior Data Scientist. Erica works with our Government Division and is currently providing data science and data engineering expertise on our Data Analytics as a Service (DAaaS) and Odin-FM projects. Erica has 10+ years of experience building scalable software solutions leveraging machine learning and statistical modeling. With a B.S. in Mathematics and an M.S. in Statistics, her areas of expertise include time-series modeling, big data architecture frameworks, and productionalizing data science applications. Learn more about Erica on LinkedIn.

For more information about how our Data Science Team can help with your organization’s data challenges, contact Janette Steets, Director of Data Science, Illumination Works.

About Illumination Works

Illumination Works is a trusted technology partner in user-centric digital transformation, delivering impactful business results to clients through a wide range of services including big data information frameworks, data science, data visualization, and application/cloud development, all while focusing the approach on the end-user perspective. Established in 2006, ILW has primary offices in Dayton and Cincinnati, Ohio, as well as physical operations in Utah and the National Capital Region. In 2020, Illumination Works adopted a hybrid work model and currently has employees in 15+ states and is actively recruiting.